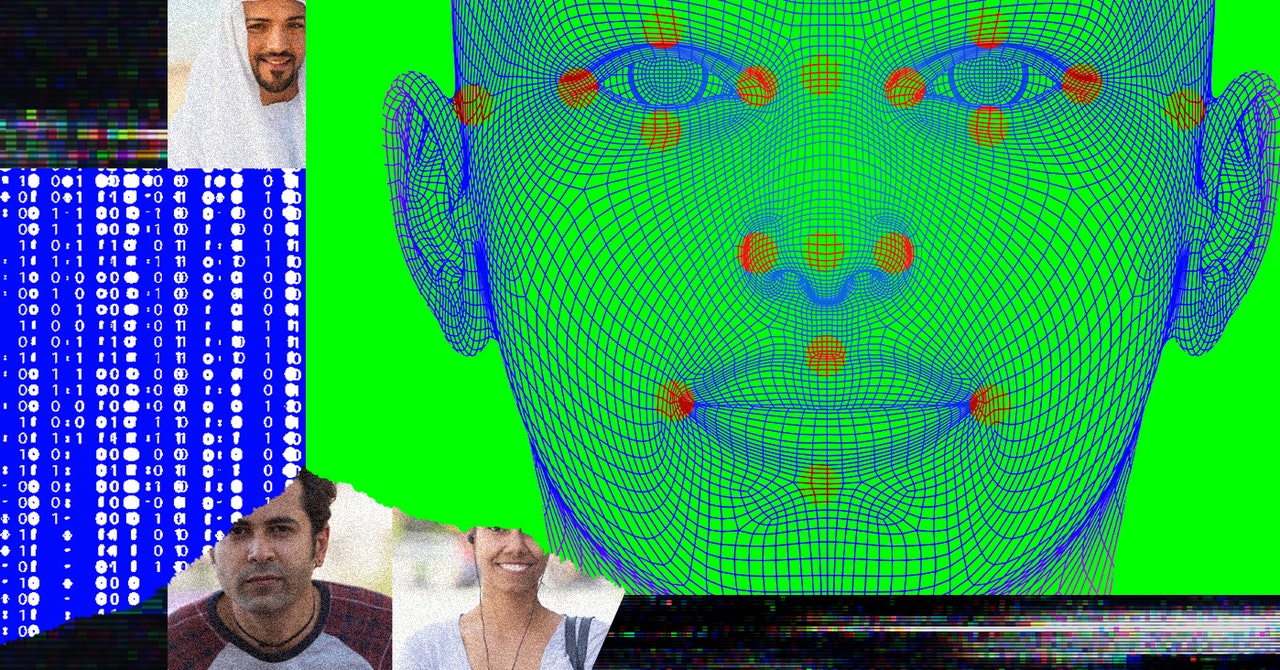

Armed with a belief in technology’s generative potential, a growing faction of researchers and companies aims to solve the problem of bias in AI by creating artificial images of people of color. Proponents argue that AI-powered generators can rectify the diversity gaps in existing image databases by supplementing them with synthetic images. Some researchers are using machine learning architectures to map existing photos of people onto new races in order to “balance the ethnic distribution” of datasets. Others, like Generated Media and Qoves Lab, are using similar technologies to create entirely new portraits for their image banks, “building … faces of every race and ethnicity,” as Qoves Lab puts it, to ensure a “truly fair facial dataset.” As they see it, these tools will resolve data biases by cheaply and efficiently producing diverse images on command.

The issue that these technologists are looking to fix is a critical one. AIs are riddled with defects, unlocking phones for the wrong person because they can’t tell Asian faces apart, falsely accusing people of crimes they did not commit, and mistaking darker-skinned people for gorillas. These spectacular failures aren’t anomalies, but rather inevitable consequences of the data AIs are trained on, which for the most part skews heavily white and male—making these tools imprecise instruments for anyone who doesn’t fit this narrow archetype. In theory, the solution is straightforward: We just need to cultivate more diverse training sets. Yet in practice, it’s proven to be an incredibly labor-intensive task thanks to the scale of inputs such systems require, as well as the extent of the current omissions in data (research by IBM, for example, revealed that six out of eight prominent facial datasets were composed of over 80 percent lighter-skinned faces). That diverse datasets might be created without manual sourcing is, therefore, a tantalizing possibility.

As we look closer at the ways that this proposal might impact both our tools and our relationship to them, however, the long shadows of this seemingly convenient solution begin to take frightening shape.

Computer vision has been in development in some form since the mid-20th century. Initially, researchers attempted to build tools top-down, manually defining rules (“human faces have two symmetrical eyes”) to identify a desired class of images. These rules would be converted into a computational formula, then programmed into a computer to help it search for pixel patterns that corresponded to those of the described object. This approach, however, proved largely unsuccessful given the sheer variety of subjects, angles, and lighting conditions that could constitute a photo, as well as the difficulty of translating even simple rules into coherent formulae.

Over time, an increase in publicly available images made a more bottom-up process via machine learning possible. With this methodology, mass aggregates of labeled data are fed into a system. Through “supervised learning,” the algorithm takes this data and teaches itself to discriminate between the desired categories designated by researchers. This technique is much more flexible than the top-down method since it doesn’t rely on rules that might vary across different conditions. By training itself on a variety of inputs, the machine can identify the relevant similarities between images of a given class without being told explicitly what those similarities are, creating a much more adaptable model.
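To make the bottom-up idea concrete, here is a minimal sketch of supervised learning, assuming a deliberately simple technique (a nearest-centroid classifier) rather than anything a real vision system uses. The two-number "features," the labels, and the function names are all hypothetical; the point is only that the program is never given a rule, just labeled examples to average.

```python
import math
from collections import defaultdict


def fit_centroids(examples):
    """Learn one average feature vector (centroid) per label.

    `examples` is a list of (features, label) pairs. No rule about what
    defines a label is supplied; it is inferred from the examples.
    """
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [total / counts[label] for total in totals]
        for label, totals in sums.items()
    }


def predict(centroids, features):
    """Assign the label whose learned centroid is closest to `features`."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical labeled training data: made-up two-number feature vectors.
TRAINING = [
    ((0.9, 0.8), "face"),
    ((0.8, 0.9), "face"),
    ((1.0, 0.7), "face"),
    ((0.1, 0.2), "not_face"),
    ((0.2, 0.1), "not_face"),
    ((0.0, 0.3), "not_face"),
]

if __name__ == "__main__":
    model = fit_centroids(TRAINING)
    print(predict(model, (0.7, 0.6)))
    print(predict(model, (0.3, 0.2)))
```

Everything the sketch "knows" comes from the examples in `TRAINING`, which is also why its judgments can be no better than the data it was handed.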

Still, the bottom-up method isn’t perfect. In particular, these systems are largely bounded by the data they’re provided. As the tech writer Rob Horning puts it, technologies of this kind “presume a closed system.” They have trouble extrapolating beyond their given parameters, leading to limited performance when faced with subjects they aren’t well trained on; discrepancies in data, for example, led Microsoft’s FaceDetect to have a 20 percent error rate for darker-skinned women, while its error rate for white males hovered around 0 percent. The ripple effects of these training biases on performance are the reason that technology ethicists began preaching the importance of dataset diversity, and why companies and researchers are in a race to solve the problem. As the popular saying in AI goes, “garbage in, garbage out.”
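Disparities like these only become visible when errors are counted separately for each group instead of being averaged together. A minimal sketch of that bookkeeping follows; the group names and records are invented toy data for illustration, not figures from any real audit, and `error_rate_by_group` is a hypothetical helper.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the fraction of wrong predictions for each group.

    `records` is a list of (group, predicted, actual) tuples.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Invented toy records, NOT real audit data.
RECORDS = [
    ("well_represented", "match", "match"),
    ("well_represented", "no_match", "no_match"),
    ("well_represented", "match", "match"),
    ("well_represented", "no_match", "no_match"),
    ("under_represented", "match", "match"),
    ("under_represented", "match", "no_match"),  # the one wrong prediction
    ("under_represented", "no_match", "no_match"),
    ("under_represented", "match", "match"),
]

if __name__ == "__main__":
    for group, rate in error_rate_by_group(RECORDS).items():
        print(f"{group}: {rate:.0%} error rate")
```

Averaged over all eight toy records the error rate is 12.5 percent, a number that hides the fact that every mistake falls on one group.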

This maxim applies equally to image generators, which also require large datasets to train themselves in the art of photorealistic representation. Most facial generators today employ Generative Adversarial Networks (or GANs) as their foundational architecture. At their core, GANs work by pitting two networks, a Generator and a Discriminator, against each other. While the Generator produces images from noise inputs, the Discriminator attempts to sort the generated fakes from the real images provided by a training set. Over time, this “adversarial” arrangement enables the Generator to improve and create images that the Discriminator is unable to identify as fakes. The training images serve as the anchor to this process. Historically, tens of thousands of these images have been required to produce sufficiently realistic results, indicating the importance of a diverse training set in the proper development of these tools.
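The back-and-forth between the two networks can be sketched in a few dozen lines, assuming a drastically simplified setting: the "images" are single numbers, the Generator is a line (`a * noise + b`), and the Discriminator is a one-variable logistic classifier. The names, the learning rate, and the "real" data (numbers clustered around 4) are all assumptions made for illustration; real face generators use deep networks, but the alternating update is the same idea.

```python
import math
import random

REAL_MEAN = 4.0  # assumed center of the toy "real" training data


def sigmoid(u):
    # Numerically stable logistic function.
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps=5000, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # Generator: fake = a * noise + b
    w, c = 0.0, 0.0  # Discriminator: P(real) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(REAL_MEAN, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: lower -log D(real) - log(1 - D(fake)),
        # i.e. get better at telling real samples from generated ones.
        grad_w = grad_c = 0.0
        for x in real:
            miss = 1.0 - sigmoid(w * x + c)
            grad_w -= miss * x
            grad_c -= miss
        for x in fake:
            fooled = sigmoid(w * x + c)
            grad_w += fooled * x
            grad_c += fooled
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # Generator step: lower -log D(fake), i.e. shift the fakes toward
        # whatever the Discriminator currently scores as "real".
        grad_a = grad_b = 0.0
        for z in noise:
            caught = 1.0 - sigmoid(w * (a * z + b) + c)
            grad_a -= caught * w * z
            grad_b -= caught * w
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch

    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"generator offset b = {b:.2f} (real data is centered on {REAL_MEAN})")
```

The Generator never sees the real data directly; it only learns what the Discriminator, trained on that data, will accept. Because this toy Discriminator is so simple, it mostly polices where the fakes are centered rather than how varied they are, a small-scale reminder that a generator inherits both the contents and the blind spots of its training set.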
